At the company I work for (IMS), our Python package story is somewhat similar to Plone's in that it consists of a lot of library packages that aren't specific to one project. Some of these are available publicly on PyPI and a public GitHub, but a lot of them live only in our private GitLab. It's possible to pip install directly from a branch or a tag, but this has serious limits. What I wanted was package releases.

For example, let's say I have a production environment where I pin ims.foo==1.2.1 and ims.bar==2.3.4. I'm working on an upgrade to ims.bar that requires ims.foo >= 1.3. Within ims.bar, I don't want to point the dependency at a git tag for ims.foo 1.3, because that level of specificity should be up to the application (the production environment). But as a safeguard and as a courtesy to other developers, I really want to say that ims.bar requires ims.foo >= 1.3. You can't do that with git tags, but you can with package releases (Python wheels).
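To make the difference concrete, here is a minimal sketch of how a range requirement such as ims.foo >= 1.3 is checked against candidate releases. It assumes plain dotted numeric versions (no pre-release suffixes) and is a simplification, not pip's actual PEP 440 logic; `parse_version` and `satisfies_minimum` are hypothetical helper names.

```python
from __future__ import annotations


def parse_version(text: str) -> tuple[int, ...]:
    """Turn '1.2.1' into (1, 2, 1). Only handles plain dotted integers."""
    return tuple(int(part) for part in text.split("."))


def satisfies_minimum(installed: str, minimum: str) -> bool:
    """Return True if `installed` is at least `minimum`."""
    a, b = parse_version(installed), parse_version(minimum)
    # Pad the shorter tuple with zeros so that 1.3 and 1.3.0 compare as equal.
    width = max(len(a), len(b))
    a += (0,) * (width - len(a))
    b += (0,) * (width - len(b))
    return a >= b


if __name__ == "__main__":
    # The currently pinned release is too old for the upgraded ims.bar ...
    print(satisfies_minimum("1.2.1", "1.3"))  # False
    # ... but any release from 1.3 onward is acceptable, not just one tag.
    print(satisfies_minimum("1.3.0", "1.3"))  # True
    print(satisfies_minimum("1.4.2", "1.3"))  # True
```

A git tag can only name one exact point in history, whereas the range check accepts every release at or above the minimum, which leaves the exact pin to the application.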

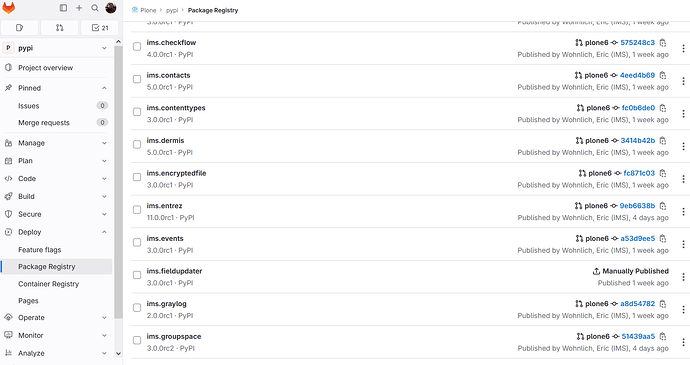

GitLab has a Package Registry feature available to each project. I don't actually want to have a separate registry for each package, I just want one for all of our Plone packages. So I set up a new project "pypi" to be our own, local pypi. Here's what it looks like after I populated it with a bunch of package releases.

To set this up, I use GitLab CI/CD. There's a lot going on with those pipelines that is mostly outside the scope of this post. The relevant section of the .gitlab-ci.yml file is:

    release:  # job name is a placeholder
      stage: deploy
      image: python:3.8
      rules:
        - if: $CI_COMMIT_BRANCH == 'master' || $CI_COMMIT_BRANCH == 'main'
      before_script: []
      script:
        - pip install build twine
        # Install the package so its version can be read from the installed metadata
        - pip install -e . --extra-index-url https://git.imsweb.com/api/v4/projects/1442/packages/pypi/simple
        - PKG_VERSION=$(python -c "from importlib.metadata import version; print(version(\"${CI_PROJECT_NAME}\"))")
        # Tag the merged commit with the package version
        - git config user.email "${GITLAB_USER_EMAIL}"
        - git config user.name "${GITLAB_USER_NAME}"
        - git tag -a v${PKG_VERSION} -m "creating tag ${PKG_VERSION}"
        # Push the tag under the same name it was created with
        - git push https://oauth2:${GITLAB_ACCESS_TOKEN}@git.imsweb.com/${CI_PROJECT_PATH}.git v${PKG_VERSION}
        # Build the distributions and upload them to the shared "pypi" project
        - python -m build
        - TWINE_PASSWORD=${CI_JOB_TOKEN} TWINE_USERNAME=gitlab-ci-token python -m twine upload --repository-url ${CI_API_V4_URL}/projects/1442/packages/pypi dist/*

    # Optional, for tag-less releases. Only use for pre-releases!
    manual_release:
      stage: deploy
      image: python:3.8
      rules:
        - if: $CI_COMMIT_BRANCH == "plone6"
          when: manual
          allow_failure: true
      before_script: []
      script:
        - pip install build twine
        - python -m build
        - TWINE_PASSWORD=${CI_JOB_TOKEN} TWINE_USERNAME=gitlab-ci-token python -m twine upload --repository-url ${CI_API_V4_URL}/projects/1442/packages/pypi dist/*

The first of these is our typical release workflow. When a branch is merged into main (or master), we automatically create a git tag for it, build the Python package, and upload the resulting wheel and source archive using GitLab's package registry API. The second is for manual releases. I use this to create some pre-release versioned packages before I actually merge that branch into main. What's really nice about both of these is that the upload to the registry is authorized via the CI_JOB_TOKEN created automatically for that job. No need to create and save an SSH key. (Pushing the tag in the first job uses a separate GITLAB_ACCESS_TOKEN variable.)
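The version lookup and tag naming in the release job can be sketched in Python. This uses the same standard-library call as the pipeline (`importlib.metadata.version`); the helper names `installed_version` and `release_tag` are hypothetical, and returning `None` for a missing package is a choice made for this sketch rather than something the pipeline does.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional


def installed_version(dist_name: str) -> Optional[str]:
    """Return the installed version of a distribution, or None if absent."""
    try:
        return version(dist_name)
    except PackageNotFoundError:
        # The package must be installed (e.g. with `pip install -e .`)
        # before its metadata can be read.
        return None


def release_tag(pkg_version: str) -> str:
    """Build the tag name used for a release, e.g. '1.3.0' -> 'v1.3.0'."""
    return f"v{pkg_version}"


if __name__ == "__main__":
    print(release_tag("1.3.0"))  # v1.3.0
    # A name that is not installed yields None instead of raising.
    print(installed_version("no-such-distribution-ims-example"))  # None
```

This is also why the job runs `pip install -e .` first: the version is read from installed package metadata, not parsed out of pyproject.toml.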

The screenshot from GitLab above is the human-readable way to access those package releases. You can download and inspect them from there, but what I want to do is pip install them. GitLab exposes them through a PyPI-style "simple" index, so pip can find them:

    pip install ims.graylog --index-url https://git.imsweb.com/api/v4/projects/1442/packages/pypi/simple

Here 1442 is the ID of my "pypi" project. Even better, you can put this in a pip.conf file:

    [global]
    extra-index-url = https://git.imsweb.com/api/v4/projects/1442/packages/pypi/simple

The pip documentation covers the location and scope of pip.conf files (see Configuration, pip documentation v23.2.1). I use Docker to build the image our CI/CD jobs use to run tests on a pre-cached Plone environment (again, outside the scope of this post!), and in that Dockerfile I copy in a pip.conf and set the PIP_CONFIG_FILE variable. On my local machine I just put it in my user directory, and this is what I tell the other devs to do as well. Creating that pip.conf is all they have to do to be able to install any of our internal packages, the same way they would install anything from pypi.org.
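If you would rather generate that pip.conf than copy it around, a small script along these lines could do it. This is only a sketch: `simple_index_url` and `write_pip_conf` are hypothetical helper names, and the file is written to a temporary directory here instead of a real pip configuration location.

```python
import configparser
import os
import tempfile


def simple_index_url(api_v4_url: str, project_id: int) -> str:
    """Build the PyPI-style 'simple' index URL for a GitLab project."""
    return f"{api_v4_url.rstrip('/')}/projects/{project_id}/packages/pypi/simple"


def write_pip_conf(path: str, index_url: str) -> None:
    """Write a pip.conf with a single [global] extra-index-url entry."""
    config = configparser.ConfigParser()
    config["global"] = {"extra-index-url": index_url}
    with open(path, "w", encoding="utf-8") as handle:
        config.write(handle)


if __name__ == "__main__":
    url = simple_index_url("https://git.imsweb.com/api/v4", 1442)
    with tempfile.TemporaryDirectory() as tmp:
        conf_path = os.path.join(tmp, "pip.conf")
        write_pip_conf(conf_path, url)
        with open(conf_path, encoding="utf-8") as handle:
            print(handle.read())
```

In a Dockerfile or a developer setup script, the same file would instead be written to wherever pip is configured to look, for example the path named by PIP_CONFIG_FILE.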

To wrap up, here's a sample dependencies section from the pyproject.toml of one of my packages. It contains a mix of private packages in our GitLab and public packages on PyPI:

    dependencies = [
        "plone",
        "collective.z3cform.datagridfield>=3.0.0",
        "ims.behaviors>=3.0.0-rc.1",
        "ims.widgets>=4.0.0-rc.1",
        "ims.zip>=5.0",
        "graypy"
    ]

Of course, the package doesn't know where each dependency lives; that's all deferred to pip configuration. But the goal for the package is achieved: it can declare a minimum (or any other) version constraint on its dependencies.
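To illustrate that the requirement strings carry only a name and a version constraint, and nothing about which index serves them, here is a rough sketch that splits them apart. `split_requirement` is a hypothetical helper that handles only simple `name` or `name<specifier>` strings (no extras or environment markers); it is not a full requirement parser.

```python
import re
from typing import Tuple

# A project name, optionally followed by a version specifier such as ">=3.0.0".
REQUIREMENT = re.compile(r"^\s*([A-Za-z0-9][A-Za-z0-9._-]*)\s*(.*?)\s*$")


def split_requirement(requirement: str) -> Tuple[str, str]:
    """Split 'ims.zip>=5.0' into ('ims.zip', '>=5.0')."""
    match = REQUIREMENT.match(requirement)
    if match is None:
        raise ValueError(f"unsupported requirement: {requirement!r}")
    return match.group(1), match.group(2)


if __name__ == "__main__":
    dependencies = [
        "plone",
        "collective.z3cform.datagridfield>=3.0.0",
        "ims.behaviors>=3.0.0-rc.1",
        "ims.zip>=5.0",
    ]
    for requirement in dependencies:
        name, specifier = split_requirement(requirement)
        # Private (ims.*) and public packages look identical at this level.
        print(f"{name:40} {specifier or '(any version)'}")
```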

CAVEAT: Adding an index with extra-index-url does not make pip prefer it over the default PyPI when a package of the same name exists in both; pip has no notion of index priority. So, for example, say I wanted to fork a public project and create my own private release of a change, but then the original repo updates and releases to PyPI with the same version I was using. I can't tell pip to use mine instead. I think in that case you would be better off pointing to a git tag of your fork, if you can't actually contribute to the main project.
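The effect can be pictured with a deliberately simplified model: candidates from every configured index go into one pool, and the choice is made on version alone. This is an illustration under that assumption, not pip's real resolver; `Candidate` and `pick_best` are hypothetical names.

```python
from typing import List, NamedTuple, Tuple


class Candidate(NamedTuple):
    index: str                 # where the release was found
    version: Tuple[int, ...]   # e.g. (2, 0, 1)


def pick_best(candidates: List[Candidate]) -> Candidate:
    """Pick the highest version from the pooled candidates.

    The index a candidate came from plays no part in the comparison.
    """
    return max(candidates, key=lambda candidate: candidate.version)


if __name__ == "__main__":
    pool = [
        Candidate(index="private", version=(2, 0, 1)),  # my patched fork
        Candidate(index="pypi", version=(2, 0, 2)),     # newer upstream release
    ]
    # The upstream release wins purely because its version is higher.
    print(pick_best(pool).index)
```

When both indexes offer the same version, nothing in this model (or in the index configuration) lets you say which copy should be used, which is why a direct reference to the fork is the safer route.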