Actually, can you still double check you're not statically assigning those cores to anything else? Would be silly if Plone CI builds step on the toes of your production VMs.

No, those are used by 2 VMs, the CI and one with very low CPU usage (most of the time idle).

Or, alternatively, do one container per server, and just let it use all the resources and only use the container for software isolation.

Running `lxc exec CONTAINER_NAME lscpu` before and after `lxc config set CONTAINER_NAME limits.cpu 1` yields the exact same value, so either it is ignored, or lscpu is not the right tool to check this. `getconf _NPROCESSORS_ONLN` also returns the total amount rather than the restricted amount.
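One possible explanation, offered as an assumption rather than a confirmed diagnosis: `lscpu` and `getconf _NPROCESSORS_ONLN` report the CPUs that are online on the host, while a container CPU limit may be enforced by restricting which CPUs the processes are allowed to run on. A minimal Python sketch to compare the two numbers from inside the container (the function name `cpu_counts` is made up for illustration):

```python
import os


def cpu_counts():
    """Return (online_cpus, usable_cpus) for the current process."""
    # Number of CPUs the OS reports as online; this is the same kind of
    # figure that getconf _NPROCESSORS_ONLN prints.
    online = os.cpu_count() or 1
    # CPUs this process is actually allowed to run on. sched_getaffinity
    # is Linux-only, so fall back to the online count elsewhere.
    if hasattr(os, "sched_getaffinity"):
        usable = len(os.sched_getaffinity(0))
    else:
        usable = online
    return online, usable


if __name__ == "__main__":
    online, usable = cpu_counts()
    print(f"online CPUs: {online}, usable by this process: {usable}")
```

If the second number drops after setting the limit, the limit is applied and only the reporting tools are misleading. This check only detects limits enforced through CPU pinning; a quota-based limit would not show up here.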

Big news!!

Thanks to @pbauer and @polyester (so the Foundation board in general) we have a new server available with 12 cores:

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Byte Order: Little Endian

CPU(s): 12

On-line CPU(s) list: 0-11

Thread(s) per core: 2

Core(s) per socket: 6

Socket(s): 1

NUMA node(s): 1

Vendor ID: GenuineIntel

CPU family: 6

Model: 63

Model name: Intel(R) Xeon(R) CPU E5-1650 v3 @ 3.50GHz

Stepping: 2

CPU MHz: 1197.448

CPU max MHz: 3800.0000

CPU min MHz: 1200.0000

BogoMIPS: 6984.36

Virtualization: VT-x

L1d cache: 32K

L1i cache: 32K

L2 cache: 256K

L3 cache: 15360K

NUMA node0 CPU(s): 0-11

Flags: fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush dts acpi mmx fxsr sse sse2 ss ht tm pbe syscall nx pdpe1gb rdtscp lm constant_tsc arch_perfmon pebs bts rep_good nopl xtopology nonstop_tsc cpuid aperfmperf pni pclmulqdq dtes64 monitor ds_cpl vmx smx est tm2 ssse3 sdbg fma cx16 xtpr pdcm pcid dca sse4_1 sse4_2 x2apic movbe popcnt tsc_deadline_timer aes xsave avx f16c rdrand lahf_lm abm cpuid_fault epb invpcid_single pti intel_ppin ssbd ibrs ibpb stibp tpr_shadow vnmi flexpriority ept vpid fsgsbase tsc_adjust bmi1 avx2 smep bmi2 erms invpcid cqm xsaveopt cqm_llc cqm_occup_llc dtherm ida arat pln pts flush_l1d

I created two nodes on it (Node5 and Node6) with 6 executors each.

Have fun trying to make them busy

Sorry for the spam... Python 3.7 has been installed on the nodes for quite a while, but I forgot to enable it on Jenkins. Well, not anymore.

If one wants to play with it, create a virtualenv build step and on the dropdown you will see Python 3.7 as an option.

I wouldn't expect any regression from 3.6, but anyhow, we can eventually test on both 3.6 as well as 3.7.

I expect regressions when using Python 3.7 instead of 3.6. (Zope does not yet support Python 3.7, too.)

Each supported Python version should be tested individually.

6 cores, not 12. We run the same hardware for our CI. This can run the non-robot tests in 10 minutes.

We cannot containers. Let's do bare metal. Please. That'd also greatly simplify the executor queue design and the test splitting design.

I'd like to see 2.6 .. 2.7 and 3.4 .. 3.7 on the CI.

Ran some benchmarks to see how the most trivial parallelisation of the tests would work. Used Python 3.7.0 compiled with --enable-optimizations. The tests actually run on 3.7.0, which is a decent starting point.

lscpu | tee parallel.log && echo "" | tee -a parallel.log && git log -1 --format="%ai %d %H" | tee -a parallel.log && for n in $(seq 1 "$(getconf _NPROCESSORS_ONLN)"); do echo "" && echo "n = $n" && ROBOTSUITE_PREFIX=ROBOT bin/test --all -j "$n" -t '!ROBOT' 2>/dev/null | tail -n 1; done | tee -a parallel.log

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Byte Order: Little Endian

CPU(s): 12

On-line CPU(s) list: 0-11

Thread(s) per core: 2

Core(s) per socket: 6

Socket(s): 1

NUMA node(s): 1

Vendor ID: GenuineIntel

CPU family: 6

Model: 62

Model name: Intel(R) Xeon(R) CPU E5-1650 v2 @ 3.50GHz

Stepping: 4

CPU MHz: 1231.323

CPU max MHz: 3900.0000

CPU min MHz: 1200.0000

BogoMIPS: 6999.57

Virtualization: VT-x

L1d cache: 32K

L1i cache: 32K

L2 cache: 256K

L3 cache: 12288K

NUMA node0 CPU(s): 0-11

Flags: fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush dts acpi mmx fxsr sse sse2 ss ht tm pbe syscall nx pdpe1gb rdtscp lm constant_tsc arch_perfmon pebs bts rep_good nopl xtopology nonstop_tsc aperfmperf eagerfpu pni pclmulqdq dtes64 monitor ds_cpl vmx smx est tm2 ssse3 cx16 xtpr pdcm pcid dca sse4_1 sse4_2 x2apic popcnt tsc_deadline_timer aes xsave avx f16c rdrand lahf_lm epb ssbd ibrs ibpb stibp tpr_shadow vnmi flexpriority ept vpid fsgsbase smep erms xsaveopt dtherm ida arat pln pts spec_ctrl intel_stibp flush_l1d

2018-09-24 14:24:32 +0200 (HEAD -> 5.2, origin/5.2) 018ba5dc02f6ca7dd3e03f327fc4ff373e28c311

n = 1

Total: 9228 tests, 58 failures, 40 errors and 275 skipped in 29 minutes 3.867 seconds.

n = 2

Total: 9520 tests, 68 failures, 41 errors and 0 skipped in 28 minutes 1.659 seconds.

n = 3

Total: 9520 tests, 67 failures, 41 errors and 0 skipped in 19 minutes 15.774 seconds.

n = 4

Total: 9300 tests, 53 failures, 36 errors and 0 skipped in 14 minutes 25.575 seconds.

n = 5

Total: 9300 tests, 53 failures, 36 errors and 0 skipped in 11 minutes 51.595 seconds.

n = 6

Total: 9271 tests, 52 failures, 47 errors and 0 skipped in 10 minutes 27.564 seconds.

n = 7

Total: 9271 tests, 53 failures, 47 errors and 0 skipped in 10 minutes 58.729 seconds.

n = 8

Total: 9271 tests, 52 failures, 47 errors and 0 skipped in 10 minutes 52.678 seconds.

n = 9

Total: 9230 tests, 54 failures, 38 errors and 0 skipped in 10 minutes 33.451 seconds.

n = 10

Total: 9271 tests, 51 failures, 47 errors and 0 skipped in 10 minutes 24.175 seconds.

n = 11

Total: 9230 tests, 52 failures, 38 errors and 0 skipped in 10 minutes 1.881 seconds.

n = 12

Total: 9291 tests, 54 failures, 37 errors and 0 skipped in 9 minutes 56.034 seconds.

Splitting the non-Robot Python 3 runs into running 6 layers in parallel seems sane. The rest is then a bit of a puzzle as we'll have a mix of 4 core and 6 core machines.

What worries me the most is the varying test count across the runs, but I'll chalk that up to using 3.7.0 at this point.
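To make the scaling easier to read, here is a small sketch that turns the wall-clock times from the log above into speedup factors relative to the n = 1 run. The timings are copied from the log; the helper names `to_seconds` and `speedups` are made up for illustration.

```python
import re

# Wall-clock results copied from the benchmark log above (n = parallel jobs).
TIMINGS = {
    1: "29 minutes 3.867 seconds",
    2: "28 minutes 1.659 seconds",
    3: "19 minutes 15.774 seconds",
    4: "14 minutes 25.575 seconds",
    5: "11 minutes 51.595 seconds",
    6: "10 minutes 27.564 seconds",
    7: "10 minutes 58.729 seconds",
    8: "10 minutes 52.678 seconds",
    9: "10 minutes 33.451 seconds",
    10: "10 minutes 24.175 seconds",
    11: "10 minutes 1.881 seconds",
    12: "9 minutes 56.034 seconds",
}

DURATION = re.compile(r"(\d+) minutes ([\d.]+) seconds")


def to_seconds(text):
    """Convert 'M minutes S seconds' into seconds as a float."""
    minutes, seconds = DURATION.search(text).groups()
    return int(minutes) * 60 + float(seconds)


def speedups(timings):
    """Map each job count to its speedup relative to the n = 1 run."""
    baseline = to_seconds(timings[1])
    return {n: baseline / to_seconds(t) for n, t in timings.items()}


if __name__ == "__main__":
    for n, factor in speedups(TIMINGS).items():
        print(f"n = {n:2d}: {factor:.2f}x faster, efficiency {factor / n:.0%}")
```

On these numbers the speedup reaches roughly 2.8x at n = 6 and barely improves beyond that, which is consistent with the machine having six physical cores.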

With https://jenkins.plone.org/view/PLIPs/job/plip-py3.7 I created a copy of the Py3-Job that uses Python 3.7.

After that is done we can compare the results to https://jenkins.plone.org/view/PLIPs/job/plip-py3.

I had already fixed one issue with 3.7 (https://github.com/plone/borg.localrole/commit/26514d0) but there probably will be some more.

3.4 is not supported by Zope, so I see no need to test against this Python version.

3.5 will go end of life in 2020. I'd expect nobody to migrate his/her Plone project to this version.

Excellent, thank you. Only doing 3.6 and 3.7 simplifies the juggling act of making builds quicker a lot.

This also means f-strings and type annotations are something Plone could actually go for.

This will be possible after dropping Python 2 support. Zope will do so in Zope 5 but I do not think that this will happen before 2020.
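For readers who have not used them yet, a small sketch of what f-strings and type annotations look like on Python 3.6+. The function and its arguments are invented for illustration and are not Plone code:

```python
def describe_build(job: str, python_version: str, failures: int = 0) -> str:
    """Return a one-line summary of a CI build."""
    # f-strings interpolate expressions directly inside the string literal.
    status = "green" if failures == 0 else f"{failures} failing"
    return f"{job} on Python {python_version}: {status}"


if __name__ == "__main__":
    print(describe_build("plip-py3.7", "3.7"))
```

Neither feature can be parsed by Python 2.7, which is why a shared code base has to wait.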

Ha, caught it.

OSError: [Errno 48] Address already in use

There are other (multiples of) zServer layers than just the Robot tests.

@sunew did you take a look at dynamically picking a free port for zServer test layers?

There are three ways to get those parallelised too:

- Have it automatically pick ports

- Isolate all such layers into a separate sequential test run

- Use a wrapper to run the tests, which allocates the ports for the runs (this has unsolved aspects in regards to how to negotiate this between two test runs happening in parallel)
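The first option can be sketched with nothing but the standard library: binding to port 0 asks the operating system for any unused port. This is a minimal illustration, not the ZServer test layer code; the function name `bind_to_free_port` is hypothetical, and how a layer would consume the socket is left open.

```python
import socket


def bind_to_free_port(host="127.0.0.1"):
    """Return a listening socket bound to a port chosen by the OS."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    # Port 0 asks the operating system for any currently unused port.
    sock.bind((host, 0))
    sock.listen(1)
    return sock


if __name__ == "__main__":
    first = bind_to_free_port()
    second = bind_to_free_port()
    # getsockname() reveals which ports were actually assigned.
    print("ports:", first.getsockname()[1], second.getsockname()[1])
    first.close()
    second.close()
```

The sketch returns the bound socket instead of just the port number on purpose: closing the socket and re-binding the same number later would reopen a window in which a parallel run could grab that port.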

The sprint in Halle is starting today. We'll do an initial standup on Monday at 2pm CEST (12pm UTC) where we create teams and distribute tasks. If you want to contribute remotely, please join us in a hangout at https://hangouts.google.com/hangouts/_/event/ct8e35vjvc59n01sk5ckugfqto0

A quick update:

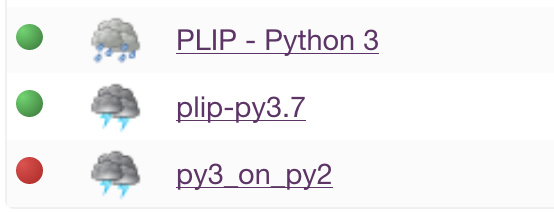

- We just had the first green builds for Python 3.6 and Python 3.7 \o/

- The py3_on_py2 job still has some remaining failing robot tests. That runs the whole py3-branches plus Archetypes in Python 2.7. https://jenkins.plone.org/view/PLIPs/job/py3_on_py2

- Zope 4.0b6 has been released. Updating our versions leads to some more issues that need to be fixed (see https://github.com/plone/Products.CMFPlone/issues/2590).

- Once 4.0b6 is used and all three jobs are green we will need to merge a lot of branches at the same time.

- The sprint in Halle was a huge success and we made a lot of progress. A report will be published soon (I was down with a nasty cold until yesterday)

Fantastic work Philip et al.!!! Thank you!

And now finally all builds are green. We are planning the tasks necessary before a release of Plone 5.2a1 in:

I finally published a sprint report on the Saltlabs Sprint:

I'll cross-post it here so that any discussions do not have to go through our website's Disqus:

Python 3, Plone 5.2, Zope 4 and more

Report from the SaltLabs Sprint in Halle, Germany and the aftermath

With over 30 attendees the SaltLabs Sprint was among the bigger sprints in the history of Plone and Zope.

Fortunately, our host Gocept just moved into a new office and had plenty of space to spare. Stylish as we are, the Plone crowd quickly congregated in the palace-like salon with red walls and renaissance paintings. Even better: Throughout the week the cafe Kaffej downstairs was open for us to eat breakfast, lunch and for work. We tried to keep the barista busy producing excellent Cappuccino. Thanks!

Zope 4

The sprint split into two groups, one working on Zope and one working on Plone. A short report about what happened in the Zope group can be found at https://blog.gocept.com/2018/10/11/beta-permission-for-earl-zope-extended . Zope 4.0b6 was released just after the sprint. The new Beta 6 includes the new bootstrap-styled ZMI, support for Python 3.7 and a metric ton of bug fixes compared to 4.0b5, which was released in May this year. By the way: Zope can now be installed without buildout using pipenv.

Python 3

The Plone crowd was huge and worked on several tasks. The biggest task - and the main reason to join the sprint - was continuing to port Plone to Python 3. Jens Klein, Peter Mathis, Alessandro Pisa, David Glick (remote) and Philip Bauer as lead worked mainly on fixing all remaining failing tests and getting the jenkins-builds to pass. We dove deep into crazy test-isolation-issues but also added a couple of missing features to Dexterity that were so far only tested with Archetypes.

During the sprint we got very close to a green build but a couple of really nasty test-isolation issues prevented a green build. A week after the sprint we finally got that done and all tests of Plone now pass in Python 2.7, Python 3.6 and Python 3.7 with the same code-base!

With the tests passing we're now merging that work and preparing the release of Plone 5.2a1. The tasks for this are discussed in this ticket

Deprecate Archetypes

Archetypes will not be ported to Python 3 but still has to work when running Plone 5.2 in Python 2.7. Since all tests that used PloneTestCase were still using Archetypes, Philip changed that to use Dexterity and created a new test layer (plone.app.testing.bbb_at.PloneTestCase) that is now used by all the packages using the Archetypes stack. See https://github.com/plone/plone.app.testing/pull/51. You could use this layer in your Archetypes-based addon packages that you want to port to Plone 5.2.

Addons

Yes, Plone is now able to run on Python 3. But without the huge ecosystem of addons that would be of limited real-world use. Jan Mevissen, Franco Pellegrini (remote) and Philip Bauer ported a couple of addons to Python 3 that Plone developers use all the time:

- plone.reload: https://github.com/plone/plone.reload/pull/9

- Products.PDBDebugMode: https://github.com/collective/Products.PDBDebugMode/pull/8

- plone.app.debugtoolbar: https://github.com/plone/plone.app.debugtoolbar/pull/20

- Products.PrintingMailHost: https://github.com/collective/Products.PrintingMailHost/pull/6

- mr.developer: https://github.com/fschulze/mr.developer/pull/193

Being able to run tests against Plone in Python 3, and with these development-tools now available also, it is now viable to start porting other addons to Python 3. To find out how hard that is I started porting collective.easyform: https://github.com/collective/collective.easyform/pull/129. The work is only 97% finished since some tests need more work but it is now useable in Python 3!

ZODB Migration

Harald Friesenegger and Robert Buchholz worked on defining a default migration story for existing databases using `zodbupdate` (https://github.com/zopefoundation/zodbupdate). To discuss the details of this approach we had a hangout with Jim Fulton, David Glick and Sylvain Viollon. They solved a couple of tricky issues and wrote enough documentation. This approach seems to work well enough and the documentation points out some caveats, but the ZODB migration will require some more work.

Relevant links:

- Meta-Ticket: https://github.com/plone/Products.CMFPlone/issues/2525

- Upgrade-Guide: https://github.com/plone/documentation/blob/5.2/manage/upgrading/version_specific_migration/upgrade_to_python3.rst#database-migration

- Prepared Buildout: https://github.com/frisi/coredev52multipy/tree/zodbupdate

ZODB Issues

Sune Broendum Woeller worked on a very nasty and complex issue with KeyErrors being raised while releasing resources of a connection when closing the ZODB. That happened pretty frequently in our test suites but was so far unexplained. He analyzed the code and finally was able to add a failing test to prove his theory. Then Jim Fulton realized the problem and wrote a fix for it. This will allow us to update to the newest ZODB version once it is released. See https://github.com/zopefoundation/ZODB/issues/208 for details.

WSGI-Setup

Thomas Schorr added the zconsole module to Zope for running scripts and an interactive mode. Using WSGI works in Python 2 and Python 3 and will replace ZServer in Python 3.

Frontend and Theming

Thomas Massman, Fred van Dijk, Johannes Raggam and Maik Derstappen looked into various front-end issues, mainly with the Barceloneta theme. They closed some obsolete tickets and fixed a couple of bugs.

They also fixed some structural issues within the Barceloneta theme. The generated HTML markup now has the correct order of the content columns (main, then left portlets, then right portlets) which allows better styling for mobile devices. Also in the footer area we are now able to add more portlets to generate a nice looking doormat. See the ticket https://github.com/plone/plonetheme.barceloneta/pull/163 for screenshots and details.

They also discussed a possible enhancement of the Diazo based theming experience by including some functionality of spirit.plone.theming into plone.app.theming and cleaning up the Theming Control Panel. A PLIP will follow for that.

New navigation with dropdown support

Peter Holzer (remote) continued to work on the new navigation with dropdown support, which was started in collective.navigation by Johannes Raggam. Due to the new exclude_from_nav index and optimized data structures the new navigation is also much faster than other tree-based navigation solutions (10x-30x faster based on some quick tests).

Static Resource Refactoring

Johannes Raggam finished work on his PLIP to restructure static resources.

With this we no longer carry 60MB of static resources in the CMFPlone repository. It also allows using different versions of mockup in Plone, enables us to release our libraries on npm and will make it easier to switch to a different framework.

Skin scripts removal

Katja Süss, Thomas Lotze, Maurits van Rees and Manuel Reinhardt worked hard at removing the remaining python_scripts. The work is still ongoing and it would be great to get rid of the last of these before a final Plone 5.2 release. See https://github.com/plone/Products.CMFPlone/issues/1801 for details. Katja also worked on finally removing the old resource registry (for js and css).

Test parallelization

Joni Orponen made a lot of progress on speeding up our test-runs by running different test-layers in parallel. The plan is to get them from 30-60 minutes (depending on server and test-setup) to less than 10 minutes. For a regularly updated status of this work see CI run speedups for buildout.coredev

Documentation and User-Testing

Paul Roeland fought with robot-tests creating the screenshots for our documentation. And won. An upgrade guide to Plone 5.2 and Python 3 was started by several people. Jörg Zell did some user-testing of Plone on Python 3 and documented some errors that need to be triaged.

Translations

Jörg Zell worked on the German translations for Plone and nearly got to 100%. After the sprint Katja Süß did an overall review of the German translation and found some wording issues that need discussion.

Plone React

Rob Gietema and Roel Bruggink mostly worked on their trainings for React and Plone-react, now renamed to “Volto”. Both will be giving these trainings at Plone Conference in Tokyo. On the second day of the sprint Rob demoed the current state of the new react-based frontend for Plone.

Assorted highlights

- In a commit from 2016 an invisible whitespace was added to the doctests of plone.api. That now broke our tests in very obscure ways. Alessandro used some dark magic to search and destroy.

- The `__repr__` for persistent objects changed, breaking a lot of doctests. We still have to figure out how to deal with that. See https://github.com/zopefoundation/zope.site/issues/8 for details.

- There was an elaborate setup to control the port during robot-tests. By not setting a port at all the OS actually takes care of this to make sure the ports do not conflict. See https://github.com/plone/plone.app.robotframework/pull/86. Less is sometimes more.

- Better late than never: We now have a method `safe_nativestring` in `Products.CMFPlone.utils` besides our all-time favorites `safe_unicode` and `safe_encode`. It transforms to string (which is bytes in Python 2 and text in Python 3). By the way: There is also `zope.schema.NativeString` and `zope.schema.NativeStringLine`.

- We celebrated the 17th birthday of Plone with a barbecue and a generous helping of drinks.
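To illustrate the "native string" idea from the highlights above, here is a minimal sketch of a helper that returns bytes on Python 2 and text on Python 3. It is not the actual `safe_nativestring` implementation from `Products.CMFPlone.utils`; the name `to_native_string`, the default encoding and the pass-through of other values are assumptions made for this example.

```python
import sys

PY2 = sys.version_info[0] == 2
# Only the selected branch is evaluated, so `unicode` is never looked up
# on Python 3, where that name does not exist.
text_type = unicode if PY2 else str  # noqa: F821


def to_native_string(value, encoding="utf-8"):
    """Convert bytes or text to the native str type of the running Python."""
    if PY2:
        # Native str is bytes on Python 2: encode text, keep bytes as-is.
        if isinstance(value, text_type):
            return value.encode(encoding)
        return value
    # Native str is text on Python 3: decode bytes, keep text as-is.
    if isinstance(value, bytes):
        return value.decode(encoding)
    return value


if __name__ == "__main__":
    print(type(to_native_string(b"Plone")), type(to_native_string(u"Plone")))
```

Both calls in the usage line yield the same type on a given interpreter, which is the point of such a helper in a code base that runs on Python 2.7 and Python 3.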

I guess most people know already but: Plone 5.2a1 was released in Tokyo. That means Plone now supports Python 3

I wrote a blogpost with an update:

The super-short version of that blogpost:

- A final release is scheduled for February

- You should start planning your migration now

- Many developer-tools and some important addons already support Python 3

- There is more work to be done regarding migration, documentation and testing